Process

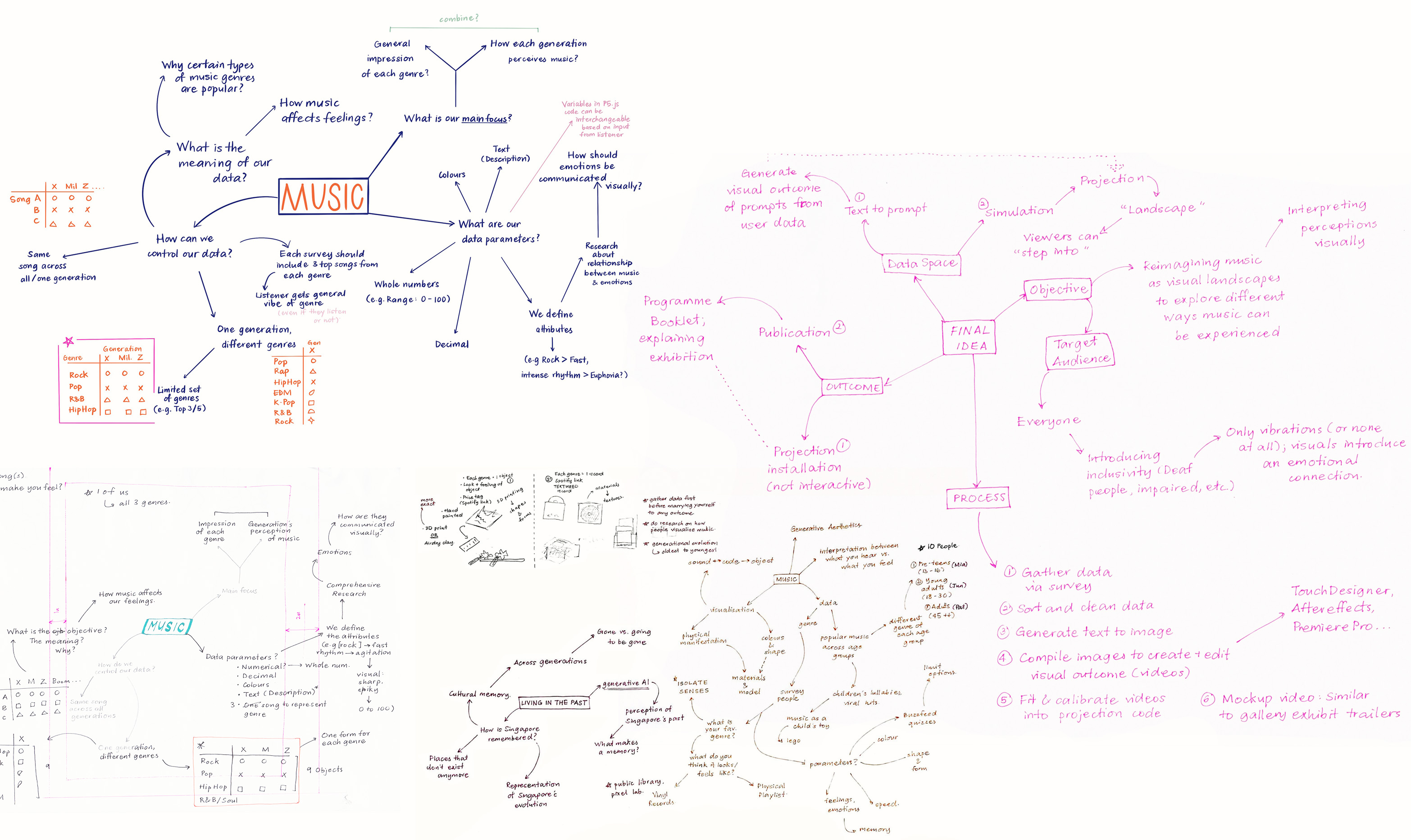

Of the ideas we first brainstormed, this was the one we were most excited to pursue, because all three of us could relate to music (who can't, right?). And that's exactly the point! Whether or not people relate deeply to music, it plays a pivotal part in life, history and culture. It evokes emotions, thoughts and memories in people, even if they don't realise it. This idea focuses on music and on making it tangible, or at least making tangible how it is perceived.

The whole idea revolves around music, but our focus shifted as we refined our final outcome. On this website, you'll find out more about the processes, thoughts, discussions and methods behind our work, and how we arrived at our final outcome.

From the very beginning, our main topic has always been music, and we wanted the meaning of our data to be significant. Our idea was sparked by a project by Tracy Cornish, who found a way to turn 2D digital malfunctions (glitches) into 3D objects through 3D printing. Like music, digital glitches have no fixed parameters that determine their attributes (How are the colours decided? What data affects their form in terms of sharpness, size, etc.?). Seeing them as physical objects produces beautiful outcomes, and we wanted to achieve the same with music.

Background

Imagine you're listening to your favourite song with your eyes closed. What thoughts, memories or emotions does the music evoke? What if there were a way to foster deeper connections between individuals and their favourite tunes?

Our project seeks to bring this music to life by connecting sound and visuals. The main idea is to transform music genres, as perceived by different individuals, into interactive 3D landscapes. These imaginary musical worlds visually represent the way people respond to music, helping us better understand abstract concepts like sound and grasp the subjective nature of personal experiences tied to music.

When music is reconnected with physicality, we engage with it more fully, because multiple senses are involved, creating a richer, more immersive experience. As potential instruments for social change and tools for music education, these landscapes offer people an enhanced way to appreciate music.

Proposed objective

Essentially, a Musical Odyssey is a form of visual storytelling that challenges preconceived notions of different music genres and styles. We want to explore the hidden potential of music and create new forms of musical expression: in this case, an integration of sound, space and movement.

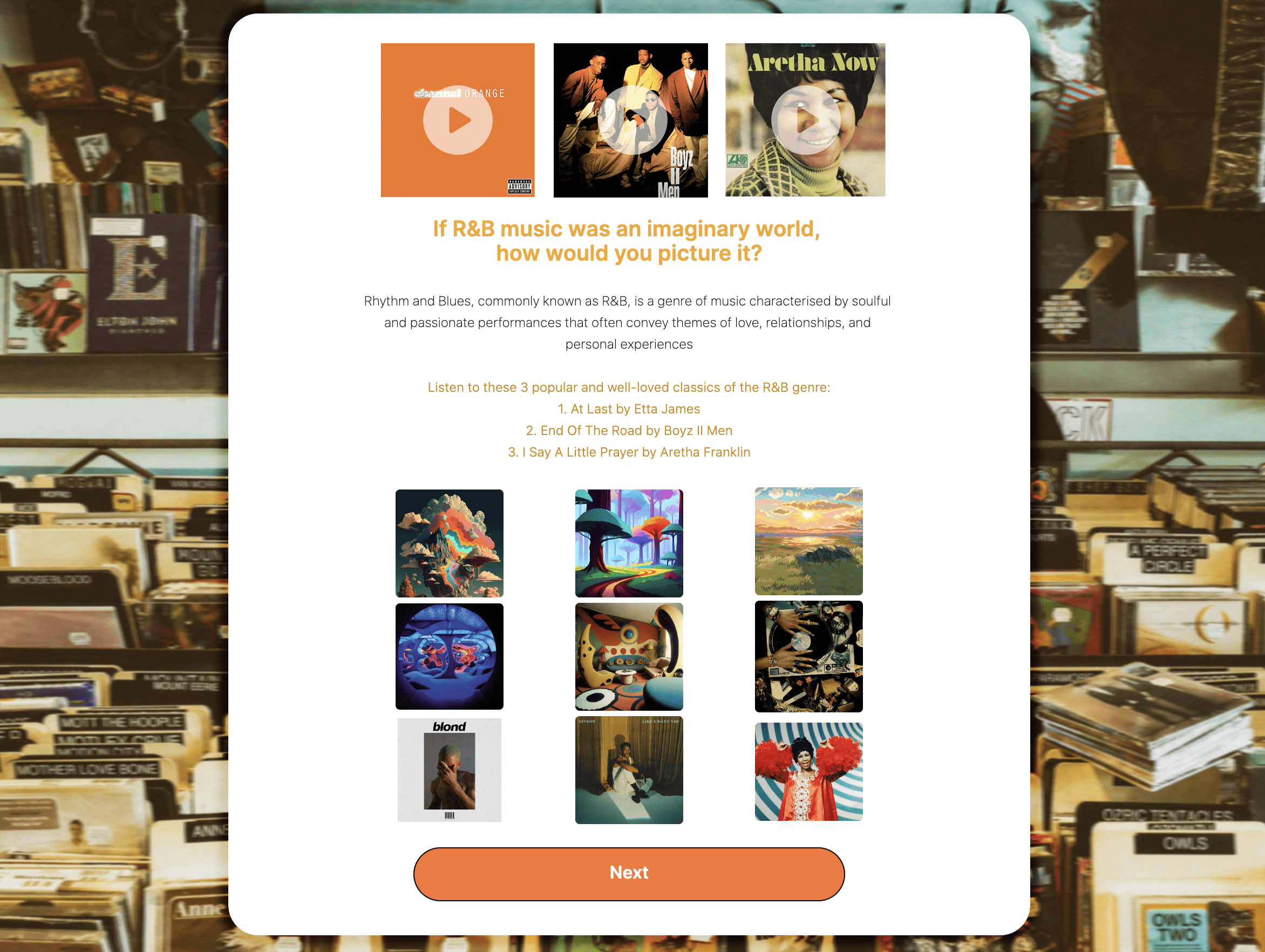

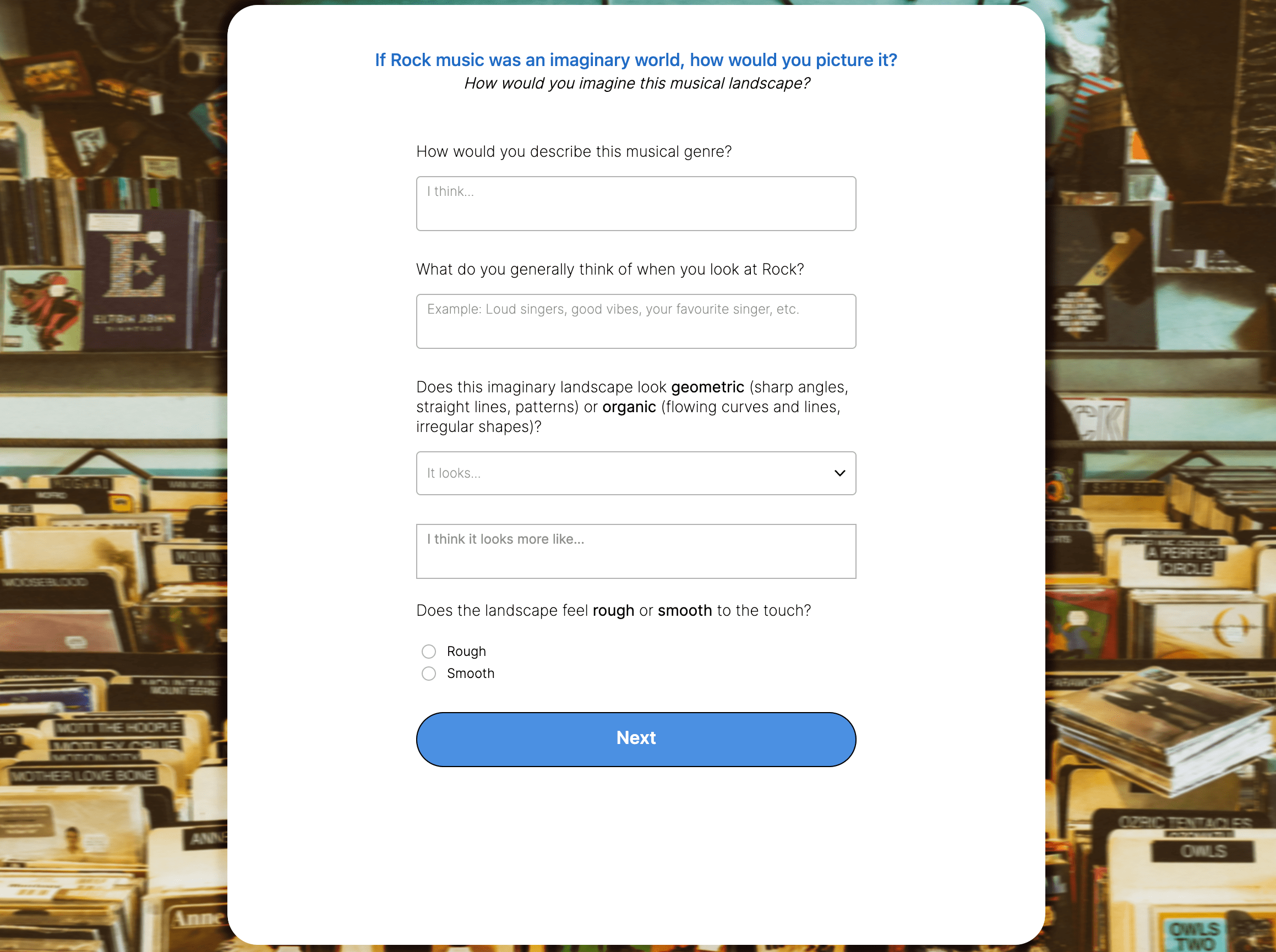

Our project depends mainly on qualitative data. Because so many genres and subgenres exist in the musical world, we narrowed our scope to three of the most popular genres: Rock, R&B and Pop. Each genre will have different physical representations based on the visualisations of various individuals. This allows us to create and experiment with diverse landscapes and visuals across a range of styles and levels of creativity.

Proposed approach

For data collection, we built our form in Feathery, a platform for crafting detailed forms with fields such as colour pickers and text areas. The form contains a comprehensive list of curated questions tailored to our study. It also provides visual aids (music videos, aesthetics) and textual aids (helper words, ideas) to help participants understand our project and its questions better. Once the data has been collected, we organise and sort it in Excel to understand the general consensus. We then go through all the data as a group to select what is significant and meaningful, omitting any erroneous or isolated responses.

Data Collection

We divided respondents into three main generations: Generation Z (born 1997–2010), millennials (1981–1996) and Generation X (1965–1980).
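Our form was built in Feathery and our sorting was done in Excel. Purely as an illustration of the grouping and consensus step, here is a minimal Python sketch. It assumes the responses are exported as records with hypothetical `birth_year`, `genre` and `colour` fields. It places each respondent in one of the three generation bands above, then reports the most frequently chosen colour for each genre and generation. The field names and the "most common answer" definition of consensus are assumptions made for this sketch, not part of our actual workflow.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

# Generation bands from the section above (birth years, inclusive).
GENERATIONS = [
    ("Generation Z", 1997, 2010),
    ("Millennials", 1981, 1996),
    ("Generation X", 1965, 1980),
]


def generation_for(birth_year: int) -> Optional[str]:
    """Return the generation band for a birth year, or None if it falls outside all three."""
    for name, start, end in GENERATIONS:
        if start <= birth_year <= end:
            return name
    return None


def consensus_colours(responses: List[dict]) -> Dict[Tuple[str, str], str]:
    """Most frequently chosen colour for each (genre, generation) pair.

    Assumes each response is a dict with hypothetical keys 'birth_year',
    'genre' and 'colour'. Respondents outside the three bands are skipped,
    a simplification of the manual review of isolated data.
    """
    tallies: Dict[Tuple[str, str], Counter] = defaultdict(Counter)
    for r in responses:
        gen = generation_for(r["birth_year"])
        if gen is None:
            continue
        # Normalise case and whitespace so "Pink" and "pink" count as the same answer.
        tallies[(r["genre"], gen)][r["colour"].strip().lower()] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in tallies.items()}


if __name__ == "__main__":
    # Made-up sample records, for illustration only.
    sample = [
        {"birth_year": 2001, "genre": "Pop", "colour": "Pink"},
        {"birth_year": 1999, "genre": "Pop", "colour": "pink"},
        {"birth_year": 2003, "genre": "Pop", "colour": "Yellow"},
        {"birth_year": 1975, "genre": "Rock", "colour": "Black"},
        {"birth_year": 1950, "genre": "Rock", "colour": "Red"},
    ]
    print(consensus_colours(sample))
```

For the made-up sample, this prints `{('Pop', 'Generation Z'): 'pink', ('Rock', 'Generation X'): 'black'}`. The 1950 respondent falls outside all three bands and is skipped.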

The questions in our form fall into four main categories: visual perception, sensory experience, emotional response and personal connection. We opened our survey to anyone and everyone, whether or not they enjoy music. Having plenty of data is far better than working with too little, so we made our questions as comprehensive as possible and encouraged participants to be as descriptive as possible in their responses. The questions below are some of the many we brainstormed over the weeks of the project. Identifying which questions elicited the kinds of answers best suited to generative AI was also an important part of the process.

Emotional Response

What emotions does this landscape evoke in you?

Can you find any elements in this landscape that make you feel nostalgic or reminiscent of a past experience?

Do you associate any personal memories or feelings with this landscape?

Visual Perception

Are there any visual elements that capture your attention?

Can you imagine a colour palette that reflects the overall vibe of a specific music genre?

What aspects contribute to its aesthetic appeal?

Sensory Experience

Can you imagine the texture of the surfaces you see in this landscape?

Would you describe the landscape as peaceful or chaotic in terms of sensory experience?

Does the spatial arrangement of sound influence your perception of a musical landscape's depth?

Personal Connection

How would you feel if you were physically present in this landscape right now?

Does this landscape connect with any personal interests or passions you have?

How does this landscape align with your own sense of aesthetics?

Artefact

Our final artefact combines several data spaces. At first, our final outcome was meant to be an interactive, tactile physical object: a tangible representation of these perceptions. However, after much refinement, our final outcome spans the following: data visualisation, visual archive (image collection), text prompt (generative image and video AI) and simulation (augmented reality filter).

Throughout this project, we constantly put ourselves in a position of learning, because we had to explore as many suitable methods and software tools as possible, such as TouchDesigner, Spark AI, Blender and many generative AI engines.

While our final outcome was not what we had originally planned, our group is fully content with how we stayed resilient despite many challenges and much confusion.

Based on participants' responses, we generated landscapes for each musical genre and compiled them into a video to create an immersive experience.

Conclusion

The journey of this project proved to be quite challenging. We encountered numerous hurdles and made continuous adjustments and refinements to our work as we moved forward. Our ideas went through several transformations, leading to a significant change in our artefact. Initially envisioned as a tangible object, it evolved into an entirely different outcome that uses AI generation to construct an immersive musical world. Despite the constant modifications and feedback, our final result successfully embodied our initial objective: visualising how individuals imagine music. The extensive and intricate discussions, along with the trials we faced throughout the project, definitely proved worthwhile in the end.